Hi everyone, Luca Cigarini here from the Threat Detection Team at Certego!

While using Zeek, we discovered a security vulnerability that has been patched in the latest LTS releases of the software (5.0.10 and 6.0.1).

We found it interesting enough to write a blog post about it. Before delving into the security issue, though, let's briefly talk about Zeek.

What is Zeek?

Zeek's developers define the software as:

a passive, open-source network traffic analyzer.

Zeek can therefore be used alongside IDSs (intrusion detection systems) to gather more data about suspicious or malicious activity. IDS alerts usually carry only the essential data, so they are quite lightweight. To perform a complete analysis, and decide whether an alert is a true or a false positive, analysts need much more data. This is where Zeek helps: it gathers the context data that is vital to analysts' work.

File Extraction

A very cool feature provided by Zeek's File Analysis Framework is file extraction. As stated in the official documentation:

Zeek can carve files from network traffic.

For example, Zeek can extract files transferred over HTTP connections.

This is very useful for monitoring files flowing through the network and, later, gathering enriched data about them, for example by uploading the extracted file, or its hash, to VirusTotal.

Since huge files take up a lot of disk space and need more time to process, it can be useful to set a maximum extraction size. This way, Zeek stops extracting a file once it reaches this limit.

Zeek provides a redefinable variable to achieve that:

FileExtract::default_limit (default value: 0, meaning unlimited).

We decided to set it to 10 MiB and that's when we stumbled upon a weird situation.
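The intended semantics of such a limit can be sketched in a few lines of C++. This is a minimal sketch under our own assumptions, not Zeek's code: the function name bytes_within_limit is hypothetical. Given the bytes already extracted (depth) and a new chunk of len bytes, it returns how many of them may still be written, treating 0 as "unlimited". It also spells out that 10 MiB is 10 * 1024 * 1024 = 10,485,760 bytes.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper, NOT part of Zeek: returns how many of `len` incoming
// bytes may still be written when `depth` bytes have already been extracted
// and `limit` is the maximum extraction size. A limit of 0 means unlimited,
// mirroring the default value of FileExtract::default_limit.
uint64_t bytes_within_limit(uint64_t limit, uint64_t depth, uint64_t len) {
    if (limit == 0) {
        return len;  // no limit configured: keep everything
    }
    if (depth >= limit) {
        return 0;  // limit already reached: drop the new bytes
    }
    const uint64_t remaining = limit - depth;
    const uint64_t n = len < remaining ? len : remaining;  // truncate at the limit
    assert(depth + n <= limit);  // invariant: never exceed the configured limit
    return n;
}

// 10 MiB expressed in bytes, the value we used in our deployment.
constexpr uint64_t kTenMiB = 10ULL * 1024 * 1024;  // 10,485,760
```

With this logic, a file is truncated at the limit rather than skipped entirely, which matches what files.log reports later on for the 410 MiB file.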

DoS vulnerability

Even though we had set FileExtract::default_limit to 10 MiB, we still saw Zeek extracting files bigger than the limit.

So we decided to do a bit of troubleshooting.

At first we suspected a misconfiguration in our Zeek setup. However, re-testing our deployment revealed no issues with our configuration: files bigger than 10 MiB were not extracted in full.

At this point we took a closer look at the logs produced by the File Analysis Framework (files.log).

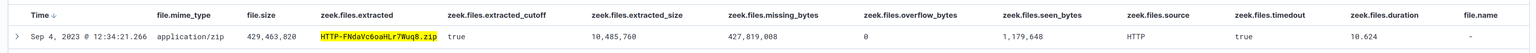

As shown in the picture, Zeek extracted a file weighing 410 MiB.

Delving deeper into Zeek's files.log, we discovered that only ~1 MiB of the 410 MiB had actually been seen! Moreover, according to Zeek, the software successfully extracted the first 10 MiB of data and then stopped processing the file.

So how did we end up with a 410 MiB file?

We then took a peek at the file's raw data and noticed that the first MiB contained actual data, while the rest was filled with zeros.
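This kind of check can also be automated instead of scrolling through a hex dump. The sketch below is our own illustration (ZeroStats and zero_stats are hypothetical names): it reads a file in chunks, counts its zero bytes and reports the offset where the final run of zeros begins, so a file that is mostly zero padding stands out immediately.

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <string>

// Hypothetical summary of how much of a file is made of zero bytes.
struct ZeroStats {
    uint64_t total_bytes = 0;          // file size in bytes
    uint64_t zero_bytes = 0;           // number of 0x00 bytes
    uint64_t trailing_zero_start = 0;  // offset where the final run of zeros begins
};

ZeroStats zero_stats(const std::string& path) {
    ZeroStats s;
    std::ifstream in(path, std::ios::binary);
    char buf[64 * 1024];  // read the file in 64 KiB chunks
    // read() fails on the last, partial chunk, so also check gcount().
    while (in.read(buf, sizeof buf) || in.gcount() > 0) {
        const std::streamsize got = in.gcount();
        for (std::streamsize i = 0; i < got; ++i) {
            if (buf[i] == 0) {
                ++s.zero_bytes;
            } else {
                // A non-zero byte means any trailing zero run starts after it.
                s.trailing_zero_start = s.total_bytes + static_cast<uint64_t>(i) + 1;
            }
        }
        s.total_bytes += static_cast<uint64_t>(got);
    }
    assert(s.zero_bytes <= s.total_bytes);
    assert(s.trailing_zero_start <= s.total_bytes);
    return s;
}
```

Comparing trailing_zero_start with total_bytes gives a quick idea of how much of an extracted file is real data and how much is padding.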

Looking at Zeek's source code helped us understand what causes this issue.

The Extract.cc file contains the C++ code responsible for file extraction. Inside the zeek::file_analysis::detail namespace we find the Extract class, with two very important methods:

- bool DeliverStream(const u_char* data, uint64_t len)

- bool Undelivered(uint64_t offset, uint64_t len)

The first one writes len bytes of data to the extracted file. Basically, it reconstructs the file from the connection's packets.

The second one writes len undelivered bytes starting at offset bytes from the beginning of the file. This is where Zeek failed to check the extracted file size. Taking a closer look at this method, we can see the following statements:

```cpp
if ( depth == offset ) {
    // Zero-initialized buffer as large as the whole gap
    char* tmp = new char[len]();

    // Written to the extracted file with no check against the extraction limit
    if ( fwrite(tmp, len, 1, file_stream) != 1 ) {
        ...
    }

    delete[] tmp;
    depth += len;
}
```

Basically, here the developers instructed Zeek to write the len missing (undelivered) bytes into the extracted file as zeros. Without a check on the maximum extraction size, this caused two issues:

- Extraction of very large files.

- A potential DoS (Denial of Service) issue. As a matter of fact:

Crafting files with large amounts of missing bytes in them could cause Zeek to spend a long time processing data, allocate a lot of main memory, and write a lot of data to disk. Due to the possibility of receiving these packets from remote hosts, this is a DoS risk.
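To make both issues concrete, the pre-patch logic can be modelled in memory. This is a simplified, hypothetical model (VulnerableExtractor and its members are our own names, not Zeek's Extract class): delivered bytes are truncated at the limit, while an undelivered gap is zero-filled without any check, like the branch quoted above. With a 10 KiB limit, a 1 KiB file followed by a 1 MiB gap ends up at just over 1 MiB, and the memory used grows with the gap size.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Simplified, hypothetical model of the pre-patch extraction logic. It is NOT
// Zeek's Extract class: the "file" is an in-memory buffer and the names are ours.
struct VulnerableExtractor {
    uint64_t limit;                  // maximum extraction size (0 = unlimited)
    uint64_t depth = 0;              // bytes written to the "file" so far
    std::vector<unsigned char> out;  // stands in for the extracted file

    explicit VulnerableExtractor(uint64_t lim) : limit(lim) {}

    // Delivered data: truncated at the extraction limit.
    void deliver_stream(const unsigned char* data, uint64_t len) {
        assert(data != nullptr || len == 0);
        uint64_t n = len;
        if (limit != 0) {
            n = depth >= limit ? 0 : (len < limit - depth ? len : limit - depth);
        }
        out.insert(out.end(), data, data + n);
        depth += n;
    }

    // Undelivered gap: zero-filled with NO limit check, so memory and output
    // size grow with len regardless of the configured limit.
    void undelivered(uint64_t offset, uint64_t len) {
        if (depth == offset) {
            out.resize(out.size() + len, 0);
            depth += len;
        }
    }
};
```

Note that once the gap has pushed depth past the limit, later delivered data is dropped, which is consistent with a file made of a little real data followed by a long run of zeros.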

To fix this issue, the developers enforced the file size limit before doing anything else with the file. If the file has not exceeded the extraction size limit, the following statement is run:

```cpp
fseek(file_stream, len + offset, SEEK_SET);
```

Instead of actually writing zeros to the file, they move the file position past the undelivered bytes, which creates a sparse file on filesystems that support them.
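The same idea can be sketched against a real FILE*. This is our own hypothetical function, not Zeek's code: the name skip_gap and the choice to stop extracting when the gap would cross the limit are simplifying assumptions. It checks the limit first and then jumps over the gap with fseek(). On POSIX systems, once data is written after the gap, the skipped region reads back as zeros without being explicitly written.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>

// Hypothetical sketch of the patched approach (not Zeek's code): enforce the
// extraction limit first, then skip the gap with fseek() instead of writing
// zeros. Returns false when the gap would push the file past the limit
// (our simplifying assumption: extraction stops there).
bool skip_gap(std::FILE* f, uint64_t& depth, uint64_t limit,
              uint64_t offset, uint64_t len) {
    assert(f != nullptr);
    if (depth != offset) {
        return true;  // gap not contiguous with what was written: nothing to do
    }
    // Overflow-safe form of "offset + len > limit".
    if (limit != 0 && (offset > limit || len > limit - offset)) {
        return false;
    }
    // Note: long is 32 bits on some platforms (e.g. Windows), limiting offsets.
    if (std::fseek(f, static_cast<long>(offset + len), SEEK_SET) != 0) {
        return false;
    }
    depth += len;
    return true;
}
```

Skipping the gap this way costs neither a large allocation nor a large write, which addresses both the memory and the disk aspects of the DoS.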

Proof of concept

To reproduce this bug, follow these steps:

- Take a PCAP file with an HTTP connection transferring a file (e.g. a capture of an Ubuntu ISO download).

- Delete a chunk of the PCAP (e.g. an arbitrary number of packets from the middle of the recording).

- Using Hosom's file extraction module, run Zeek in offline mode against the newly created PCAP file (e.g. zeek -C -r poc.pcap /path/to/local.zeek). Remember to load the module in the local.zeek script.

- Take a look at the extracted file with a hex editor or dump tool (e.g. xxd).
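The second step can be done with any PCAP editing tool. As an illustration, here is a minimal, dependency-free C++ sketch that copies a capture while dropping a range of packets. It assumes the classic libpcap file format (24-byte global header, 16-byte per-packet record header with the captured length at byte 8, magic 0xa1b2c3d4 or 0xa1b23c4d); pcapng files are not supported, and drop_packets is a hypothetical name, not part of any library.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <fstream>
#include <string>
#include <vector>

namespace {
uint32_t bswap32(uint32_t v) {
    return (v >> 24) | ((v >> 8) & 0x0000FF00u) | ((v << 8) & 0x00FF0000u) | (v << 24);
}
}  // namespace

// Hypothetical helper: copies a classic pcap file, dropping packets whose
// 0-based index is in [first, last]. Returns packets written, or -1 on error.
long drop_packets(const std::string& in_path, const std::string& out_path,
                  uint64_t first, uint64_t last) {
    assert(first <= last);  // caller must pass a valid range
    std::ifstream in(in_path, std::ios::binary);
    std::ofstream out(out_path, std::ios::binary);
    if (!in || !out) return -1;

    char global[24];  // global header is copied unchanged
    if (!in.read(global, sizeof global)) return -1;
    uint32_t magic;
    std::memcpy(&magic, global, 4);
    bool swapped;
    if (magic == 0xa1b2c3d4u || magic == 0xa1b23c4du) {
        swapped = false;  // same byte order as this machine
    } else if (magic == 0xd4c3b2a1u || magic == 0x4d3cb2a1u) {
        swapped = true;   // written with the opposite byte order
    } else {
        return -1;        // not classic pcap (e.g. pcapng)
    }
    out.write(global, sizeof global);

    long written = 0;
    std::vector<char> data;
    char rec[16];  // per-packet record header
    for (uint64_t index = 0; in.read(rec, sizeof rec); ++index) {
        uint32_t incl_len;
        std::memcpy(&incl_len, rec + 8, 4);  // captured length field
        if (swapped) incl_len = bswap32(incl_len);
        data.resize(incl_len);
        if (!in.read(data.data(), incl_len)) return -1;  // truncated record
        if (index >= first && index <= last) continue;   // drop this packet
        out.write(rec, sizeof rec);
        out.write(data.data(), incl_len);
        ++written;
    }
    return out ? written : -1;
}
```

For example, drop_packets("download.pcap", "poc.pcap", 1000, 1999) would remove 1,000 packets from the capture; the file names and indices are purely illustrative.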